OVH NVME atop Reporting Problem

From Kolmisoft Wiki

Revision as of 15:31, 13 August 2020

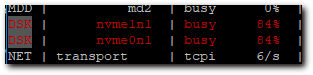

Upon installation empty idle server with NVME disks reports unusual high NVME disk usage using atop command:

This appears to be caused by a kernel bug: https://github.com/Atoptool/atop/issues/47

Because the OVH kernel upgrade procedure is broken, and newer kernels (as of 2020-08-13) do not include a fix, a faster workaround is to set the NVMe devices' I/O scheduler to 'mq-deadline' instead of the default 'none'.

On CentOS 7, this can be done as follows:

    chmod +x /etc/rc.d/rc.local
    echo "# fixing messed up nvme disk usage reporting in the atop for OVH server" >> /etc/rc.d/rc.local
    echo "echo mq-deadline > /sys/block/nvme0n1/queue/scheduler" >> /etc/rc.d/rc.local
    echo "echo mq-deadline > /sys/block/nvme1n1/queue/scheduler" >> /etc/rc.d/rc.local
    reboot

Make sure the nvme0n1 and nvme1n1 devices actually exist on your system (check with atop) and adjust the device names accordingly.
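Rather than hard-coding nvme0n1 and nvme1n1, the lines for rc.local could be generated from the NVMe disks the kernel actually exposes. The sketch below assumes each whole NVMe disk appears directly in `/sys/block` under a name matching `nvme*n*` (partitions such as `nvme0n1p1` are listed under their parent disk, not directly in `/sys/block`, so they are not matched). The function name `print_rc_local_lines` and the `SYSFS_BLOCK` override are hypothetical, added only for illustration and testing.

```shell
#!/bin/sh
# Print the rc.local lines that set mq-deadline on every NVMe disk found.
# SYSFS_BLOCK is a hypothetical override (defaults to /sys/block), used only for testing.

print_rc_local_lines() {
    root="${SYSFS_BLOCK:-/sys/block}"
    echo "# fixing messed up nvme disk usage reporting in the atop for OVH server"
    for dev in "$root"/nvme*n*; do
        # Skip the unexpanded pattern when no NVMe disk exists
        [ -e "$dev/queue/scheduler" ] || continue
        name=$(basename "$dev")
        # The generated line always targets the real sysfs path, not the override
        echo "echo mq-deadline > /sys/block/$name/queue/scheduler"
    done
}

# Review the output first, then append it with:
#   print_rc_local_lines >> /etc/rc.d/rc.local
print_rc_local_lines
```

Printing instead of appending directly makes it easy to review the generated lines before adding them to `/etc/rc.d/rc.local`; since the function emits its own header comment, it would take the place of the three `echo ... >> /etc/rc.d/rc.local` commands rather than be run in addition to them.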

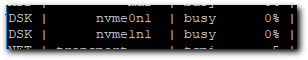

After that problem is gone:
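To double-check the fix independently of atop, each disk's `queue/scheduler` file in sysfs can be read directly: the kernel lists the available schedulers and marks the active one with square brackets (for example `none [mq-deadline] kyber`; the exact list depends on the kernel). The sketch below is a minimal, hypothetical helper, not part of atop or CentOS; the function names and the `SYSFS_BLOCK` override (which defaults to `/sys/block` and exists only so the script can be tested against a copy of the directory) are assumptions.

```shell
#!/bin/sh
# Print the active I/O scheduler of every NVMe disk.
# SYSFS_BLOCK is a hypothetical override (defaults to /sys/block), used only for testing.

# active_scheduler FILE: print the bracketed (active) entry of a queue/scheduler file
active_scheduler() {
    sed -n 's/.*\[\([^]]*\)].*/\1/p' "$1"
}

show_nvme_schedulers() {
    root="${SYSFS_BLOCK:-/sys/block}"
    for dev in "$root"/nvme*n*; do
        # Skip the unexpanded pattern when no NVMe disk exists
        [ -r "$dev/queue/scheduler" ] || continue
        echo "$(basename "$dev"): $(active_scheduler "$dev/queue/scheduler")"
    done
}

show_nvme_schedulers
```

If the rc.local lines ran at boot, each NVMe disk should be listed with `mq-deadline` rather than `none`.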